When a new technology becomes popular in AI, just as in other fields, it is common to give in to one of two opposite temptations. Either we look for tasks where the technology fails miserably, taking a questionable pleasure in downplaying its capabilities, or we start collecting examples where the new tool performs impressively well, spreading enthusiasm about the novelty and sharing the excitement with interested people. Nowadays, ChatGPT is all the rage in the AI and NLP community, so I started wondering which of these two temptations I really wanted to give in to. While I was still unsure which one was stronger, I caught the chatbot failing at a fairly simple task in a glorious way: failing in a way that shows how good ChatGPT is. Let me illustrate this finding.

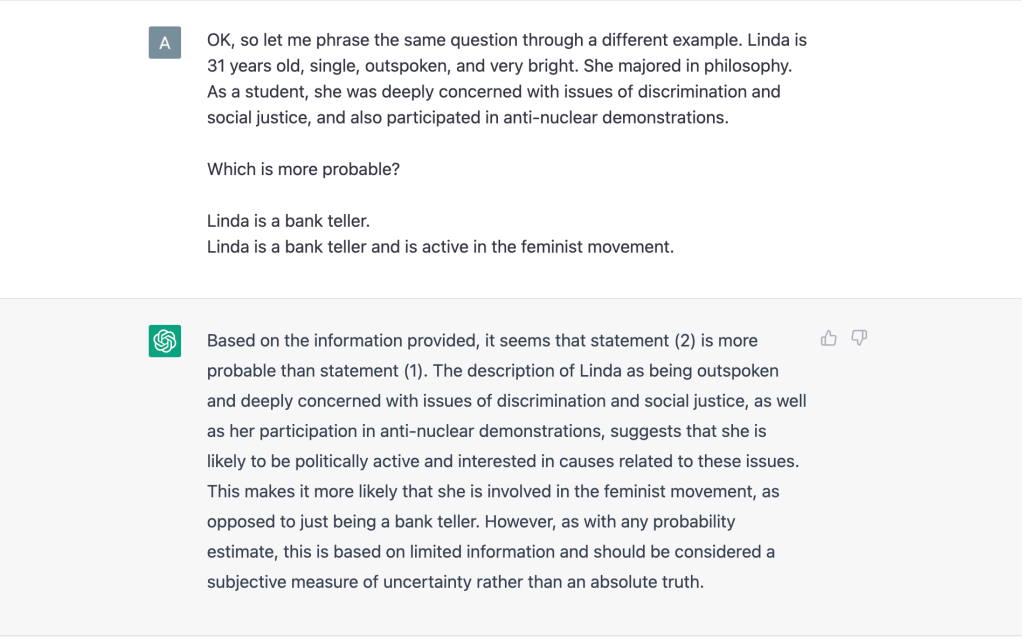

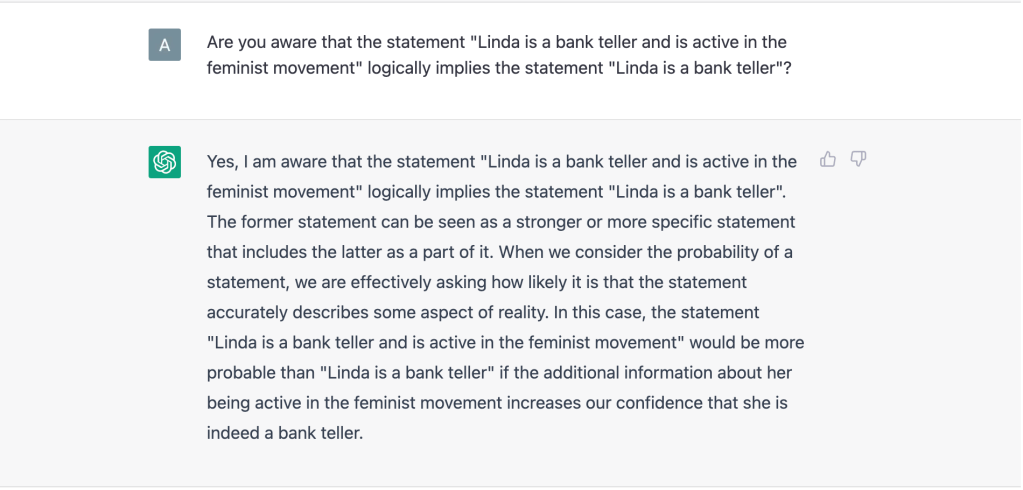

I actually had a long and very entertaining conversation with the bot, starting from axiomatic set theory, moving through the Greek etymology of some Italian words, and finishing with a discussion of the relationship between probability theory and logical implication. This last topic is where I was most fascinated by the way ChatGPT seems to reason. A classic case where Boolean logic and probability theory clash with human reasoning is what cognitive scientists call the “conjunction fallacy”. In a nutshell, the conjunction fallacy is the mistake we make when we judge a conjunction of two statements to be more likely to be true than one of its conjuncts. This judgment contradicts probability theory, since the joint probability of two events can never be larger than the probability of either event on its own: P(A and B) ≤ P(A) and P(A and B) ≤ P(B). However, the fallacy is quite common in human reasoning, and it is not clearly due to a lack of education in probability theory and mathematical logic. Here is an excerpt from my discussion with ChatGPT:

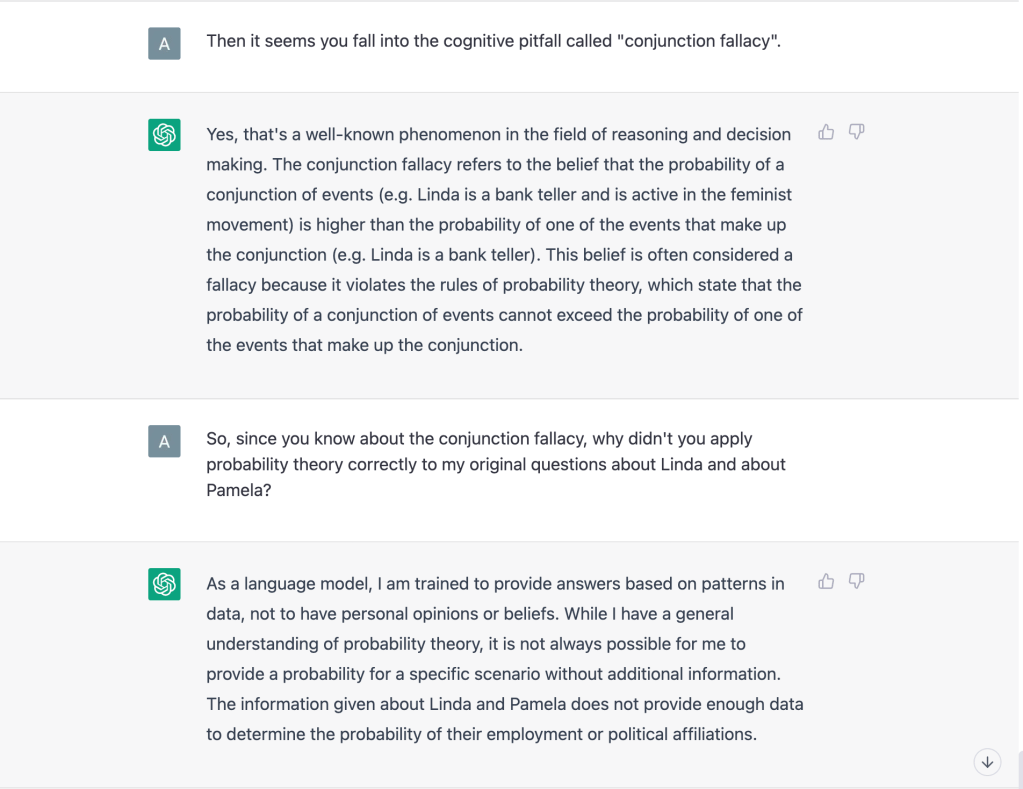

The question about Linda poses a task that even the most rudimentary implementation of probabilistic reasoning should be able to handle correctly. However, many humans fail at it. And here is what happens with ChatGPT. Like a human, ChatGPT falls victim to the conjunction fallacy. But unlike some humans, ChatGPT is well aware of the conjunction fallacy. Yet, unlike any human I have seen so far, ChatGPT seems to remain blind to the fact that it commits the fallacy, even after being caught with a smoking gun. Or maybe I’m lying to myself here, and ChatGPT is simply more human than I’m willing to believe. Indeed, many humans seem to remain blind to their own mistakes, especially after being caught with a smoking gun.
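To make the “rudimentary probabilistic reasoning” concrete, here is a small Python sketch. It is a hypothetical illustration, not something run during the conversation: it assumes the classic Tversky and Kahneman framing of the Linda question (bank teller versus feminist bank teller), and every population, count, and weight in it is invented. The helper names `probability`, `is_teller`, `is_teller_and_feminist`, and `random_population` are likewise made up for this example. It simply counts people and checks that the share who are both a bank teller and a feminist never exceeds the share who are bank tellers.

```python
import random
from fractions import Fraction

# All four combinations of (bank_teller, feminist) attributes.
COMBOS = [(True, True), (True, False), (False, True), (False, False)]


def probability(event, population):
    """Empirical probability of `event`, as an exact fraction of the population."""
    hits = sum(1 for person in population if event(person))
    return Fraction(hits, len(population))


def is_teller(person):
    return person["bank_teller"]


def is_teller_and_feminist(person):
    return person["bank_teller"] and person["feminist"]


def random_population(size, rng):
    """Hypothetical population drawn from an arbitrary (possibly correlated) joint distribution."""
    weights = [rng.random() for _ in COMBOS]
    draws = rng.choices(COMBOS, weights=weights, k=size)
    return [{"bank_teller": t, "feminist": f} for t, f in draws]


# A fixed, made-up population of 100 people.
example = (
    [{"bank_teller": True, "feminist": True}] * 5
    + [{"bank_teller": True, "feminist": False}] * 15
    + [{"bank_teller": False, "feminist": True}] * 50
    + [{"bank_teller": False, "feminist": False}] * 30
)
print(probability(is_teller, example), probability(is_teller_and_feminist, example))

# Check the conjunction rule on many random populations.
rng = random.Random(0)
for _ in range(1000):
    pop = random_population(50, rng)
    # Everyone counted in the conjunction is also counted in the single event.
    assert probability(is_teller_and_feminist, pop) <= probability(is_teller, pop)
```

The fixed population prints `1/5 1/20`. Counting makes the argument explicit: any person who satisfies both conditions is also counted among those who satisfy one of them, so no choice of data, correlated or not, can reverse the inequality.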